How to develop an Augmented Reality app on Android

Augmented reality applications show a view of the real world with information superimposed on it. There are many apps on Google Play that use Augmented Reality.

Street Lens shows interesting places in the direction the phone is pointing. It displays the camera view and superimposes information about places on top of it, based on the direction the phone is pointing.

Similarly, Google Sky Map shows the stars and planets in the direction the phone points. Here again, the app just displays images based on how the phone is held.

Augmented Reality applications follow these basic steps:

- Detect the mobile orientation and direction

- Display a background image based on the orientation and direction

- Superimpose relevant information on the image

By the direction the phone is pointing, I mean the direction in which the phone's back camera is pointing. For Augmented Reality, the back camera is the eye of the phone.

Detecting the mobile orientation and direction

The orientation of the device can be either portrait or landscape. If the height is greater than the width, the device is in portrait orientation; otherwise it is in landscape.
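The rule above fits in one line of code. The sketch below is a minimal version. The class, enum and method names are hypothetical. In an Android app you would more typically read `getResources().getConfiguration().orientation` and compare it with `Configuration.ORIENTATION_PORTRAIT`.

```java
// Hypothetical helper: classifies the orientation from the screen size using the
// rule in the text (height greater than width means portrait).
final class ScreenOrientation {
    enum Orientation { PORTRAIT, LANDSCAPE }

    static Orientation fromSize(int widthPx, int heightPx) {
        // A square screen falls through to LANDSCAPE, matching the "otherwise" in the rule.
        return heightPx > widthPx ? Orientation.PORTRAIT : Orientation.LANDSCAPE;
    }

    public static void main(String[] args) {
        System.out.println(fromSize(1080, 1920)); // PORTRAIT
        System.out.println(fromSize(1920, 1080)); // LANDSCAPE
    }
}
```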

The most important part of an Augmented Reality application is knowing the position of the phone in the 3D world. In other words, the app must know how the user is holding the phone so that it can show content based on the direction the phone is pointing.

The Android SDK provides APIs for the accelerometer and the magnetometer, and the direction of the phone can be determined from these two sensors.

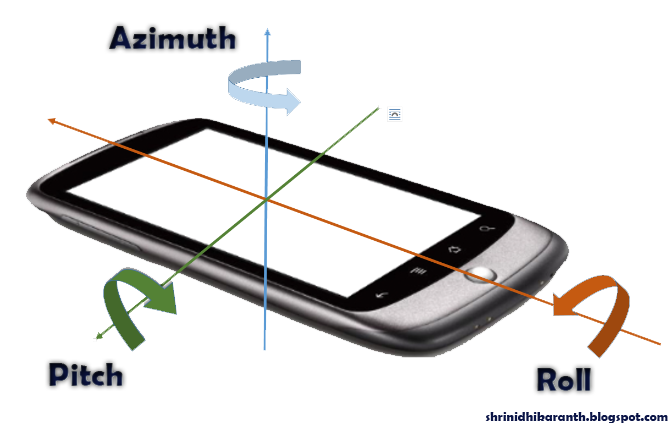

Using the accelerometer and magnetometer sensor values, we can obtain the Azimuth, Pitch and Roll values.
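To make the geometry concrete, here is a pure-Java sketch of how two sensor vectors become three angles. It is modeled on the cross-product approach behind Android's `SensorManager.getRotationMatrix` and `SensorManager.getOrientation`, but it is not Android's code. The class and method names are hypothetical, and the 0.1 rejection thresholds are simplified assumptions.

```java
// Hypothetical off-device sketch of the math behind SensorManager.getRotationMatrix
// and SensorManager.getOrientation. On a real device, call the SensorManager methods.
final class OrientationMath {

    /** Fills r (length 9) from gravity and geomagnetic vectors given in device
     *  coordinates. Returns false when the inputs cannot give a stable answer. */
    static boolean rotationMatrix(float[] r, float[] gravity, float[] geomagnetic) {
        float ax = gravity[0], ay = gravity[1], az = gravity[2];
        float ex = geomagnetic[0], ey = geomagnetic[1], ez = geomagnetic[2];

        // H = E x A is horizontal and points east.
        float hx = ey * az - ez * ay;
        float hy = ez * ax - ex * az;
        float hz = ex * ay - ey * ax;
        float normH = (float) Math.sqrt(hx * hx + hy * hy + hz * hz);
        float normA = (float) Math.sqrt(ax * ax + ay * ay + az * az);
        if (normH < 0.1f || normA < 0.1f) {
            return false; // free fall, or field parallel to gravity (assumed thresholds)
        }
        hx /= normH; hy /= normH; hz /= normH;
        ax /= normA; ay /= normA; az /= normA;

        // M = A x H is horizontal and points to magnetic north.
        float mx = ay * hz - az * hy;
        float my = az * hx - ax * hz;
        float mz = ax * hy - ay * hx;

        // Rows: east, north and up, each expressed in device coordinates.
        r[0] = hx; r[1] = hy; r[2] = hz;
        r[3] = mx; r[4] = my; r[5] = mz;
        r[6] = ax; r[7] = ay; r[8] = az;
        return true;
    }

    /** Returns {azimuth, pitch, roll} in radians from a 3x3 rotation matrix. */
    static float[] orientation(float[] r) {
        return new float[] {
            (float) Math.atan2(r[1], r[4]),  // azimuth: -Pi..Pi, 0 = north
            (float) Math.asin(-r[7]),        // pitch: -Pi/2..Pi/2
            (float) Math.atan2(-r[6], r[8])  // roll: -Pi..Pi
        };
    }
}
```

With the phone lying flat, screen up and top edge toward north, gravity reads about (0, 0, 9.81). A northern-hemisphere field such as (0, 20, -40) then gives an Azimuth of 0. Rotating the same phone so its top edge faces east gives +Pi/2.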

The Azimuth value is the most important of the three, since it tells how the phone is aligned relative to magnetic north, that is, which compass direction (north, south, east or west) it faces. The value ranges from -Pi to +Pi radians. It is 0 when the phone points north, +Pi/2 when it points east, -Pi/2 when it points west, and -Pi or +Pi when it points south.
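Because the Azimuth runs from -Pi to +Pi with 0 at north, turning it into a familiar compass heading takes only a shift and a lookup. The sketch below assumes the Azimuth is in radians, as `SensorManager.getOrientation` returns it. The class and method names are hypothetical.

```java
// Hypothetical helper: converts an Azimuth in radians (-Pi..+Pi, 0 = north,
// +Pi/2 = east) into a 0..360 degree heading and one of eight compass points.
final class Compass {
    private static final String[] POINTS = {"N", "NE", "E", "SE", "S", "SW", "W", "NW"};

    static double headingDegrees(double azimuthRadians) {
        double deg = Math.toDegrees(azimuthRadians);
        // Shift -180..180 into 0..360, so west (-90) becomes 270.
        return (deg % 360.0 + 360.0) % 360.0;
    }

    static String compassPoint(double azimuthRadians) {
        double heading = headingDegrees(azimuthRadians);
        // Each of the 8 points covers a 45 degree sector centred on it.
        int index = (int) Math.round(heading / 45.0) % 8;
        return POINTS[index];
    }
}
```

Both -Pi and +Pi map to "S", which matches the description of south above.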

The Roll value tells how the phone is held with respect to the ground. Roll is 0 when the phone lies parallel to the ground with the camera facing down, and -Pi or +Pi when the camera is facing up.

Hold the phone parallel to the ground with the camera facing down, then tilt it so that its top edge moves up or down. This tilt is measured by the Pitch value, which ranges from -Pi/2 to +Pi/2. Turning the phone while it stays parallel to the ground changes the Azimuth instead.
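The Roll behaviour described above can be turned into a simple check of which way the camera faces. This is a minimal sketch with hypothetical names. It assumes the Roll is the raw value in radians from `SensorManager.getOrientation`.

```java
// Hypothetical helper based on the Roll description: Roll is 0 with the camera
// facing straight down and -Pi or +Pi with the camera facing straight up.
final class Attitude {
    /** True when the back camera points at least partly toward the ground. */
    static boolean cameraFacingDown(double rollRadians) {
        return Math.abs(rollRadians) < Math.PI / 2;
    }
}
```

A caveat applies when the phone is held upright, as it usually is for a camera view. In that position the Pitch approaches +Pi/2 or -Pi/2, and both Roll and Azimuth become unreliable. The Android reference for `SensorManager.remapCoordinateSystem` suggests calling `remapCoordinateSystem(inR, AXIS_X, AXIS_Z, outR)` before `getOrientation` in augmented reality apps, so that the angles describe the camera's axis.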

Imagine a big sphere around your phone. Every direction the phone can point in corresponds to a point on this sphere. The combination of the Azimuth, Pitch and Roll values tells you exactly which point that is, so the phone's sensor values let you find out exactly where the phone is pointing.
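The sphere picture can be made concrete. A direction is a point on a unit sphere, and two angles are enough to locate it: one around the horizon and one above or below it. Roll then only spins the view around that direction. The sketch below assumes a hypothetical elevation angle measured in radians above the horizon. How you derive it from the Pitch depends on how the phone is held. All names are hypothetical.

```java
// Hypothetical helper: converts a viewing direction, given as an azimuth (radians
// clockwise from north) and an elevation (radians above the horizon), into a unit
// vector in an east-north-up frame, i.e. a point on the sphere around the phone.
final class ViewDirection {
    static double[] toUnitVector(double azimuth, double elevation) {
        double horizontal = Math.cos(elevation); // length of the projection onto the ground
        return new double[] {
            horizontal * Math.sin(azimuth), // east component
            horizontal * Math.cos(azimuth), // north component
            Math.sin(elevation)             // up component
        };
    }
}
```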

Even though these sensor APIs are available in the Android SDK, the sensors' behaviour and accuracy depend on the hardware. For example, on the Micromax Canvas 1 the Roll value is always 0.
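Hardware differences also show up as jitter in the raw readings. One common remedy, which the original code does not include, is an exponential low-pass filter. The sketch below is an assumption on our part, not the author's method. The class name is hypothetical, and the `alpha` value must be tuned for each device.

```java
// Hypothetical smoothing helper: an exponential low-pass filter that damps
// jitter in raw sensor readings such as accelerometer or magnetometer values.
final class LowPassFilter {
    private final float alpha; // 0 < alpha <= 1; smaller is smoother but lags more
    private float[] state;

    LowPassFilter(float alpha) {
        if (alpha <= 0f || alpha > 1f) {
            throw new IllegalArgumentException("alpha must be in (0, 1]");
        }
        this.alpha = alpha;
    }

    /** Blends a new reading into the running estimate and returns a copy of it. */
    float[] filter(float[] input) {
        if (state == null) {
            state = input.clone(); // first reading: nothing to blend with yet
        } else {
            for (int i = 0; i < state.length; i++) {
                state[i] += alpha * (input[i] - state[i]);
            }
        }
        return state.clone();
    }
}
```

Apply the filter to the raw accelerometer and magnetometer vectors before `getRotationMatrix`, not to the Azimuth itself. Near south the Azimuth jumps between +Pi and -Pi, and a naive average of those values would swing through north.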

Here is sample Android Java code that reads the Azimuth, Pitch and Roll of the device and converts them to degrees:

```java
import android.content.Context;
import android.hardware.Sensor;
import android.hardware.SensorEvent;
import android.hardware.SensorEventListener;
import android.hardware.SensorManager;

public class TiltDetector implements SensorEventListener {

    // Latest orientation angles, in degrees.
    public float swRoll;
    public float swPitch;
    public float swAzimuth;

    public SensorManager mSensorManager;
    public Sensor accelerometer;
    public Sensor magnetometer;

    // Most recent reading of each sensor (null until its first event arrives).
    public float[] mAccelerometer = null;
    public float[] mGeomagnetic = null;

    public TiltDetector(Context context) {
        mSensorManager = (SensorManager) context.getSystemService(Context.SENSOR_SERVICE);
        // getDefaultSensor returns null on devices without that sensor,
        // so production code should check for it.
        accelerometer = mSensorManager.getDefaultSensor(Sensor.TYPE_ACCELEROMETER);
        magnetometer = mSensorManager.getDefaultSensor(Sensor.TYPE_MAGNETIC_FIELD);
        mSensorManager.registerListener(this, accelerometer, SensorManager.SENSOR_DELAY_GAME);
        mSensorManager.registerListener(this, magnetometer, SensorManager.SENSOR_DELAY_GAME);
        swRoll = 0;
        swPitch = 0;
        swAzimuth = 0;
    }

    // Stop listening (for example from onPause) to save battery.
    public void Clear() {
        mSensorManager.unregisterListener(this, accelerometer);
        mSensorManager.unregisterListener(this, magnetometer);
    }

    @Override
    public void onAccuracyChanged(Sensor sensor, int accuracy) {
        // Not needed for this example.
    }

    @Override
    public void onSensorChanged(SensorEvent event) {
        // onSensorChanged is called separately for each sensor, so remember the
        // latest values of both. Copy the array: the framework may reuse the event.
        if (event.sensor.getType() == Sensor.TYPE_ACCELEROMETER) {
            mAccelerometer = event.values.clone();
        } else if (event.sensor.getType() == Sensor.TYPE_MAGNETIC_FIELD) {
            mGeomagnetic = event.values.clone();
        }

        if (mAccelerometer != null && mGeomagnetic != null) {
            float[] r = new float[9];
            float[] i = new float[9];
            boolean success = SensorManager.getRotationMatrix(r, i, mAccelerometer, mGeomagnetic);
            if (success) {
                float[] orientation = new float[3];
                SensorManager.getOrientation(r, orientation);
                // orientation now holds the Azimuth, Pitch and Roll in radians;
                // convert them to degrees.
                swAzimuth = (float) Math.toDegrees(orientation[0]);
                swPitch = (float) Math.toDegrees(orientation[1]);
                swRoll = (float) Math.toDegrees(orientation[2]);
            }
        }
    }
}
```

Displaying a background image based on the position of the phone

Once the app knows exactly where the phone is pointing, the next step is to display an appropriate image. Which image to display depends on the type of application. Street Lens, for example, displays the camera view itself.

Suppose you are standing in front of the Nasdaq stock market in New York City with the phone pointing at it. Using the camera view, the app can display exactly what the camera sees.

Superimposing relevant information on the image

Google Sky Map displays dynamically generated content based on the positions of the stars at a given time. Street Lens displays restaurants, building names and similar details based on the user's current location and the direction in which the camera points. In short, an Augmented Reality application should show information relevant to the direction the phone is pointing.
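Selecting what to show usually starts with the places near the user. The sketch below keeps only the places within a radius, using the haversine great-circle distance on a spherical Earth. The class, its nested `Place` type and the method names are hypothetical, and the mean Earth radius is an approximation. On Android, `Location.distanceBetween` computes distances on the WGS84 ellipsoid and may be preferable.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: filter places of interest by distance from the user.
final class NearbyPlaces {
    static final double EARTH_RADIUS_M = 6_371_000.0; // mean Earth radius (approximation)

    static final class Place {
        final String name;
        final double lat, lon; // degrees

        Place(String name, double lat, double lon) {
            this.name = name;
            this.lat = lat;
            this.lon = lon;
        }
    }

    /** Haversine great-circle distance in metres between two lat/lon points in degrees. */
    static double distanceMeters(double lat1, double lon1, double lat2, double lon2) {
        double phi1 = Math.toRadians(lat1), phi2 = Math.toRadians(lat2);
        double dPhi = phi2 - phi1;
        double dLambda = Math.toRadians(lon2 - lon1);
        double a = Math.sin(dPhi / 2) * Math.sin(dPhi / 2)
                 + Math.cos(phi1) * Math.cos(phi2) * Math.sin(dLambda / 2) * Math.sin(dLambda / 2);
        // min() guards against rounding pushing a slightly above 1.
        return 2 * EARTH_RADIUS_M * Math.asin(Math.sqrt(Math.min(1.0, a)));
    }

    /** Returns the places no farther than radiusM metres from the user. */
    static List<Place> within(double userLat, double userLon, List<Place> places, double radiusM) {
        List<Place> result = new ArrayList<>();
        for (Place p : places) {
            if (distanceMeters(userLat, userLon, p.lat, p.lon) <= radiusM) {
                result.add(p);
            }
        }
        return result;
    }
}
```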

GPS gives the location (latitude and longitude) of the device, and the sensor values described above give the direction in which it is pointing. With these two pieces of information, any information about the real world can be shown on the phone's screen as if it were displayed right in front of you rather than on the phone.
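Combining the two inputs comes down to a little angle arithmetic. First compute the bearing from the user's GPS position to a place. Then compare it with the Azimuth to decide whether the place falls inside the camera's view, and if so where on screen. The sketch below is one way to do this under stated assumptions. The horizontal field of view is a hypothetical input, and the linear mapping from angle to pixel is a simplification that ignores the lens projection. All names are hypothetical.

```java
// Hypothetical sketch: place a point of interest on screen from the user's position,
// the place's position and the Azimuth reported by the sensors.
final class ArOverlay {
    /** Initial great-circle bearing from point 1 to point 2 (degrees in),
     *  in radians: -Pi..Pi, 0 = north, +Pi/2 = east. */
    static double bearing(double lat1, double lon1, double lat2, double lon2) {
        double phi1 = Math.toRadians(lat1), phi2 = Math.toRadians(lat2);
        double dLambda = Math.toRadians(lon2 - lon1);
        double y = Math.sin(dLambda) * Math.cos(phi2);
        double x = Math.cos(phi1) * Math.sin(phi2) - Math.sin(phi1) * Math.cos(phi2) * Math.cos(dLambda);
        return Math.atan2(y, x);
    }

    /** Wraps an angle into -Pi..Pi, so that e.g. -340 degrees becomes +20 degrees. */
    static double wrap(double angle) {
        return Math.atan2(Math.sin(angle), Math.cos(angle));
    }

    /** Horizontal screen position in pixels, or -1 when the place lies outside the
     *  camera's horizontal field of view (all angles in radians). */
    static int screenX(double azimuth, double bearingToPlace, double horizontalFov, int screenWidth) {
        double offset = wrap(bearingToPlace - azimuth); // + means right of centre, - means left
        double half = horizontalFov / 2;
        if (Math.abs(offset) > half) {
            return -1;
        }
        return (int) Math.round(screenWidth / 2.0 + (offset / half) * (screenWidth / 2.0));
    }
}
```

On Android, `Location.bearingTo` returns a similar bearing in degrees east of true north. The Azimuth from `getRotationMatrix` is relative to magnetic north, so you may need to add the declination from `GeomagneticField.getDeclination()` before comparing the two.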

Many mobile users believe that Augmented Reality apps analyze the camera image to work out the orientation and direction. As you have seen, in apps like these the direction and position are actually obtained from the accelerometer and magnetometer sensors.